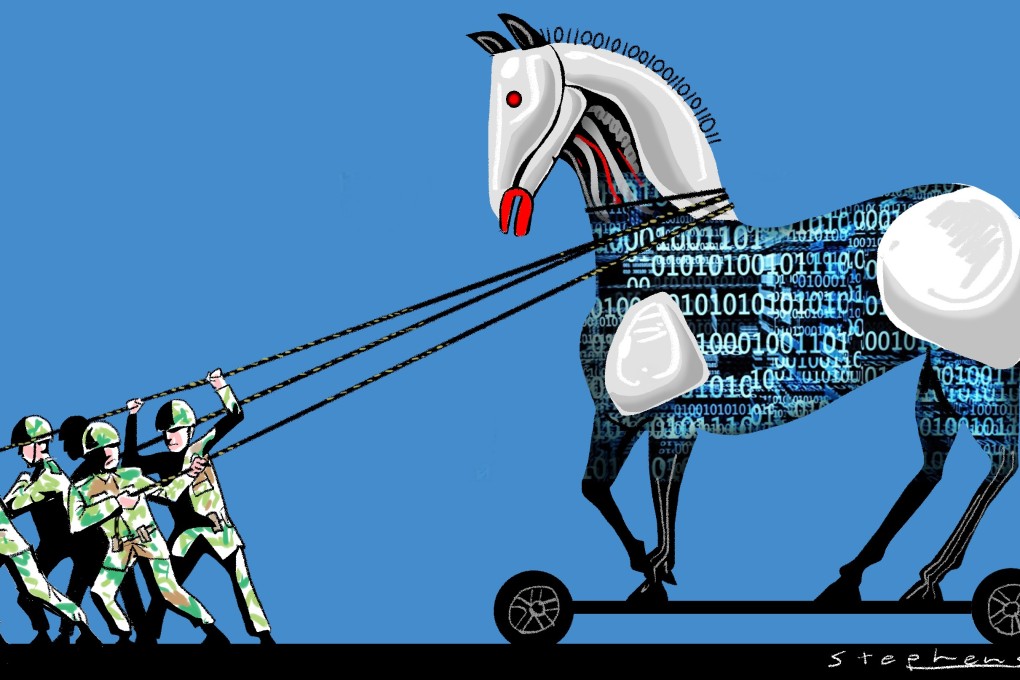

Opinion | As US, China and Russia fight for AI supremacy in new hyperwar battlefield, will good sense prevail?

- In this ‘who dares wins’ race, the US has a lead in deep-learning algorithms but China and Russia are making greater strides in applications, unencumbered by privacy or rights concerns

Will artificial intelligence (AI) ever possess the nuance, intuition, and gut instinct necessary to be a good military or intelligence analyst? The jury will be out on that question for some time, but the world’s leading governments continue to develop AI with military applications under the presumption that, eventually, machines will possess that ability. China, Russia, and the United States are in a race to determine which country will dominate the AI landscape first.

That said, US researchers have trained deep-learning algorithms to identify Chinese surface-to-air missile sites far faster than human analysts can. For example, the algorithms helped individuals with no experience of imagery analysis to find missile sites scattered across nearly 90,000 square kilometres of southeastern China. The neural network matched the 90 per cent accuracy of human experts while cutting the time needed to analyse potential missile sites from 60 hours to just 42 minutes, a speed-up of roughly 85 times. This has proven extremely useful, as satellite imagery analysts were drowning in a deluge of big data.

At the operational level, commanders will be able to “sense”, “see” and engage enemy formations far more quickly by applying machine-learning algorithms to collect and analyse huge quantities of information, and to direct swarms of complex, autonomous systems to attack the enemy simultaneously.